2022 Evaluation Process

Overview

Each year, Animal Charity Evaluators (ACE) spends several months evaluating animal advocacy organizations to identify those that work effectively and are able to do the most good with additional donations. This post describes the process that led to our 2022 charity recommendations.

Our 2022 evaluation process took place from June to November:

- June: We selected and invited charities to be reviewed.

- July to September: We gathered information from charities and drafted comprehensive reviews.

- October: We made recommendation decisions and shared drafts of reviews with charities.

- November: We addressed charities’ feedback and finalized reviews.

- November 22: We published our recommendations.

This process led to the publication of 12 new or updated comprehensive reviews, along with an update to our list of recommended charities.

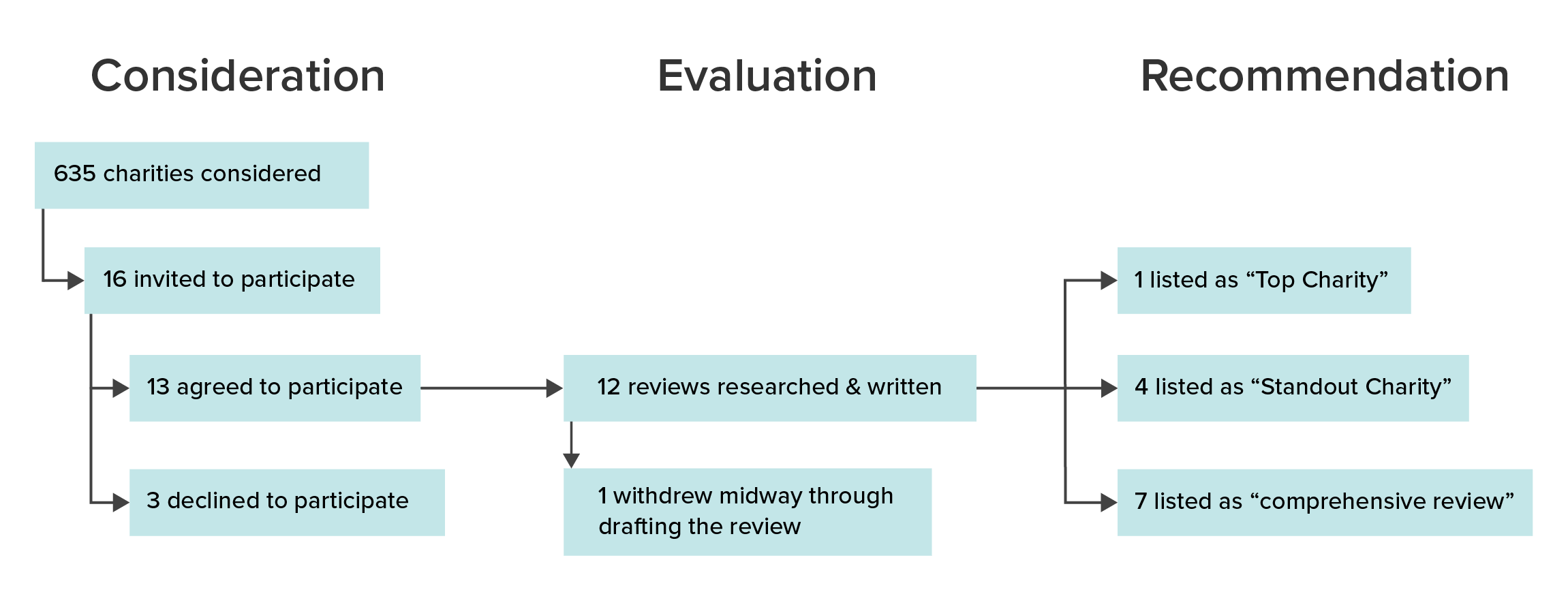

Fig. 1: Flowchart depicting ACE’s 2022 charity evaluation process

| Consideration | Evaluation | Recommendation |
| --- | --- | --- |
| 635 considered | 12 reviews researched & written | 1 listed as Top Charity |
| 16 invited to participate | 1 review started but could not be completed for unforeseen reasons | 4 listed as Standout Charity |
| 13 agreed to participate | | 7 listed as comprehensive review |
| 3 declined to participate | | |

Charity Selection

Because we re-evaluate recommended charities every two years, our list of organizations for 2022 began with six charities that were already scheduled for re-evaluation. This year, our target was to complete comprehensive reviews of 13 charities,1 so our first step was to select the additional seven.

Selection began with a list of 635 animal charities, which we narrowed down using our quantitative model.2 We made sure that groups we thought were most promising were included, such as Movement Grants applicants and charities that specifically requested to be considered, arriving at a shorter list of 58 “considered” charities. The evaluations committee then used a process of iterative discussion and voting to prioritize the list for sending out invitations.

By the end of the selection process, we had invited a total of 16 charities to participate, and only three declined to be reviewed (a 19% decline rate, compared to 52% last year). This year’s cohort of 13 charities included the six Standout Charities that were last evaluated in 2020, one charity that was last evaluated in 2021, and six other charities that were all past Movement Grants applicants and/or grantees. Unfortunately, one charity had to withdraw several weeks into the evaluation process, bringing our final cohort down to 12.

How We Gathered Information

After charities agreed to participate, we asked each of them to provide documentation about their programs, accomplishments, finances, leadership, and culture. Additionally, we distributed a survey to each charity’s staff members (and volunteers, for some), which helped us gain a better understanding of their perspectives on their organizations’ leadership and culture. If we had any questions about their submissions, we worked with representatives from each charity to ensure that we had a clear understanding of their work. We then used the information we gathered to assess each charity based on the criteria outlined in the next section.

Our Evaluation Criteria

We evaluated charities on the same four criteria as we did in 2021: Programs, Cost Effectiveness, Room for More Funding, and Leadership and Culture. However, in 2022, we updated the way we assess all four of them. Below, we outline this year’s approach and identify how it differs from the previous year. For more details on changes to our evaluation criteria in 2022, please visit our evaluation criteria page.

Programs

This year, we introduced a scoring framework to assess the effectiveness of charities’ programs, building on the four categories we used in 2021 (animal groups, countries, outcomes, and interventions). We used the Scale, Tractability, and Neglectedness (STN) framework to score different types of animal groups, countries, outcomes, and interventions based on their priority level; for countries, we also included an assessment of global influence.3 Using those scores and information supplied by the charity about their use of program funding, we arrived at a single program score for each charity, representing the expected impact of their collective programs.

Cost Effectiveness

To assess the cost effectiveness of charities’ programs, we considered their approaches to implementing interventions (using the intervention scores from the Programs criterion), their recent achievements, and the costs associated with those achievements. As with the Programs criterion, we adopted a scoring framework to assess cost effectiveness this year. We began our analysis by comparing a charity’s reported expenditures over the past 18 months to their reported achievements in each of their main programs during that time. We then weighted each program proportional to its cost to arrive at an overall cost-effectiveness score for each charity. We also verified select achievements reported by charities using publicly available information, internal documents, media reports, and independent sources.
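The cost-proportional weighting described above can be illustrated with a minimal sketch. It assumes each program already has a numeric cost-effectiveness score; the `Program` class, the program names, the dollar amounts, and the score scale are all hypothetical, since the post does not publish the underlying formula.

```python
from dataclasses import dataclass


@dataclass
class Program:
    name: str
    cost: float   # reported expenditure over the review period (hypothetical figures)
    score: float  # per-program cost-effectiveness score (hypothetical scale)


def overall_cost_effectiveness(programs):
    """Average the program scores, weighting each one by its share of total cost."""
    total_cost = sum(p.cost for p in programs)
    if total_cost <= 0:
        raise ValueError("total program cost must be positive")
    return sum(p.score * p.cost for p in programs) / total_cost


# Illustrative data only; these are not figures from any reviewed charity.
programs = [
    Program("corporate outreach", cost=300_000, score=5.0),
    Program("legal work", cost=100_000, score=3.0),
]
overall = overall_cost_effectiveness(programs)
print(overall)
```

Under this weighting, a program that accounts for three quarters of spending contributes three quarters of the overall score, so a small, high-scoring program cannot dominate the result.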

Room for More Funding

The Room for More Funding (RFMF) criterion investigates whether charities can absorb and effectively use the funding that a new or renewed recommendation may bring in. We asked charities to estimate their projected revenue and plans for expansion for the next two years, assuming that their ACE recommendation status and the amount of ACE-influenced funding they receive stay the same. This year, we also began assessing our level of confidence in charities’ projections and the feasibility of their expansion plans if they were to receive funds beyond their predicted income. We used those assessments to estimate their RFMF, adding funding to replenish their reserves where applicable.

Leadership and Culture

In this criterion, we examine the information provided by charity leadership, results of our culture survey, transparency regarding leadership and governance, and any unsolicited testimonials or whistleblower reports we receive. However, because ACE is not a watchdog organization, and because we lack the expertise and capacity to conduct investigations into any whistleblower reports we receive, our only aim is to determine whether there might be issues in a charity’s leadership and culture that have a negative impact on staff productivity and wellbeing. Unlike last year, we used an internal scoring framework4 to facilitate detailed discussions about the leadership and culture of the charities under review this year and compare charities with each other. In the end, we noted whether we had any concerns regarding the charity’s leadership and culture.

How We Made Recommendation Decisions

To select this year’s recommended charities, the ACE research team gathered each charity’s assessments and scores, ranked them, and assigned a recommendation status of Top Charity, Standout Charity, or comprehensive review (not recommended).

This year, we used an iterative ranking approach in place of a simple voting process. The new process honors the fact that disagreements between committee members are inevitable and expected—rather than disagreements causing a roadblock (e.g., needing to decide what to do in cases of a split vote), they are now part of the process. In other words, committee members do not need to agree or reach a consensus to generate a ranked list of charities. We outline all of this in detail below.

Stage 1: Evaluation committee members scored each charity on a scale from 1–7 (1 = strongly reject, 7 = strongly recommend) using the criterion scores/assessments as their guide. This was done independently and anonymously. Individual team member scores were automatically sent to a combined scoring spreadsheet, where charities were ranked based on the scores they received.

Stage 2: Evaluation committee members discussed their scores and made their case about why they felt a given charity should be recommended or not.

Stage 3: Evaluation committee members were encouraged to adjust their original recommendation scores asynchronously (based on the perspectives of others) and submit a second set of scores independently and anonymously. Again, adjusted individual scores were automatically sent to a combined scoring spreadsheet.

Stage 4: Evaluation committee members discussed the final rankings, decided which charities would be recommended, and assigned Top Charity, Standout Charity, or comprehensive review status to charities. This year, all Top and Standout Charities received a score of at least 5 (out of 7) from all evaluation team members.

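The table below contains the final scores. The team drew the cut-off for recommended charities between Charity 5 and Charity 6: the five charities above it are the only ones that received a score of at least 5 from every committee member.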

| Charity Name | Researcher A | Researcher B | Researcher C | Researcher D | Researcher E | Average |
| --- | --- | --- | --- | --- | --- | --- |
| Charity 1 | 7 | 7 | 5 | 7 | 7 | 6.6 |
| Charity 2 | 6 | 6 | 6 | 6 | 5 | 5.8 |
| Charity 3 | 6 | 6 | 5 | 6 | 6 | 5.8 |
| Charity 4 | 5 | 5 | 6 | 5.5 | 6 | 5.5 |
| Charity 5 | 5 | 5 | 5 | 5 | 6 | 5.2 |
| Charity 6 | 5 | 5 | 3 | 4.5 | 5 | 4.5 |
| Charity 7 | 3 | 3 | 5 | 3.5 | 5 | 3.9 |
| Charity 8 | 3 | 3 | 3 | 2 | 3 | 2.8 |
| Charity 9 | 3 | 2 | 3 | 2 | 3 | 2.6 |
| Charity 10 | 2 | 2 | 3 | 2 | 3 | 2.4 |
| Charity 11 | 2 | 3 | 2 | 1 | 2 | 2 |
| Charity 12 | 1 | 1 | 3 | 1 | 2 | 1.6 |
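As a rough illustration of how the combined scoring spreadsheet turns individual scores into a ranked list, the sketch below recomputes the averages from the table and ranks charities by them. It also checks which charities received at least 5 from every committee member. That check only mirrors the observation reported in Stage 4; it is not ACE’s decision rule, since the final decisions came out of committee discussion. The names `final_scores`, `RECOMMEND_MIN`, and `rank_by_average` are ours.

```python
from statistics import mean

# Final scores from the table, in the order Researcher A to Researcher E.
final_scores = {
    "Charity 1": [7, 7, 5, 7, 7],
    "Charity 2": [6, 6, 6, 6, 5],
    "Charity 3": [6, 6, 5, 6, 6],
    "Charity 4": [5, 5, 6, 5.5, 6],
    "Charity 5": [5, 5, 5, 5, 6],
    "Charity 6": [5, 5, 3, 4.5, 5],
    "Charity 7": [3, 3, 5, 3.5, 5],
    "Charity 8": [3, 3, 3, 2, 3],
    "Charity 9": [3, 2, 3, 2, 3],
    "Charity 10": [2, 2, 3, 2, 3],
    "Charity 11": [2, 3, 2, 1, 2],
    "Charity 12": [1, 1, 3, 1, 2],
}

# Assumption for illustration: the "at least 5 from everyone" observation
# from Stage 4, applied here as a simple consistency check.
RECOMMEND_MIN = 5


def rank_by_average(scores):
    """Return charity names sorted by mean score, highest first (ties keep input order)."""
    return sorted(scores, key=lambda name: mean(scores[name]), reverse=True)


ranking = rank_by_average(final_scores)
meets_floor = [name for name in ranking if min(final_scores[name]) >= RECOMMEND_MIN]

for name in ranking:
    print(f"{name}: average {mean(final_scores[name]):.1f}")
print("Scored at least 5 by every member:", meets_floor)
```

Because the ranking uses only the mean of independent scores, no consensus is needed to produce it, which is the property the iterative approach relies on.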

Once decisions were made, we finalized each charity’s draft review and sent it to the charity for feedback and approval. Charities were given the opportunity to request edits, including removing confidential information or correcting factual errors. That said, all of our reviews represent our own understanding and opinions, which are not necessarily those of the charities reviewed. This year, all 12 charities for which we drafted reviews agreed to have them published.

Recommended Charities

Out of the 12 charities that participated in our 2022 evaluation process, one earned the status of Top Charity (joining three others from 2021) and four earned the status of Standout Charity (joining seven others from 2021). These charities will retain their recommendation status until 2024. See our 2022 recommendations announcement to learn about our new recommended charities!

Participation Grants

We offer our sincere gratitude to each of the charities we evaluated this year. Participating in our evaluation process takes time and energy, and we are grateful for their willingness to be open with us about their work. In recognition of that effort, we will award participation grants of at least $2,500 to all charities that participated in our 2022 evaluation process. These grants are not contingent on a charity’s decision to publish its review: we also award participation grants to charities whose reviews we do not publish, provided they made a good-faith effort to engage with us during the evaluation process.

1. We typically aim to complete comprehensive reviews of 15 charities each year, but due to limited staff capacity, we aimed for 13 in 2022.

2. For more information about the quantitative model, see our blog post detailing the process leading to 2021 recommendations.

3. Our methodology for scoring countries uses Mercy For Animals’ Farmed Animal Opportunity Index. However, ACE uses different weightings for scale, tractability, and global influence, and we also consider neglectedness as a factor.

4. ACE’s leadership and culture scoring framework is internal to protect confidentiality. In the future, we hope to share a public version of this scoring system.