Things that surprised us about the December 2015 recommendation process

Each time we go through the evaluation process, we gain new insights that allow us to improve our research. We feel it’s important to share these with our audience in order to solicit feedback, explain potential changes in future evaluations or our general research strategy, and increase the transparency of our decision process. In this post, we present some of the things that we found surprising after completing our December 2015 recommendation process. Although some things surprised more than one of us, there was enough variation that each staff member active in the review process has included what surprised them individually.

Jon Bockman, Executive Director:

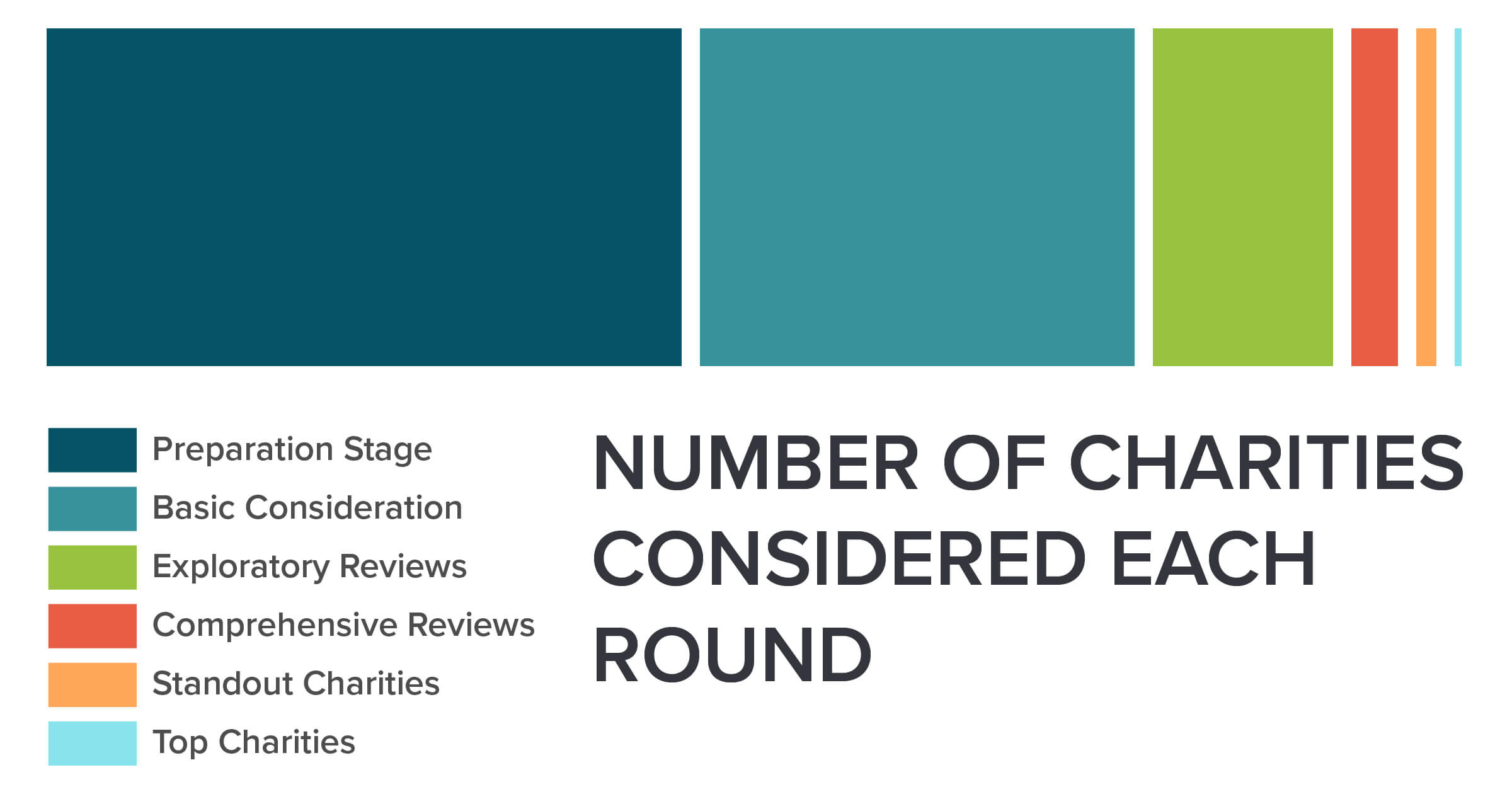

Small number of groups allowing publication of shallow reviews

As we continue to widen our scope of consideration to look more closely at new charities, we find it harder to discover new charities whose work makes them likely candidates for a recommendation. Given this wider scope, new groups that we review at a shallow level seem more likely to disagree with our assessment of their impact, which probably played a role in our inability to publish reviews for a larger percentage of organizations in 2015. We were surprised that we were able to publish only six shallow reviews, and this makes us think we might need to reconsider our selection process for future rounds of evaluations.

Quantity of standout charities

Related to the previous point, we were also surprised at the high percentage of our medium-reviewed organizations that we decided to name as standouts. In some respects this significant increase makes sense: this year we decided to look at charities doing work in novel or particularly neglected areas. Because we had not previously considered any charities doing these sorts of work, we found some outstanding groups working on important and possibly undervalued projects. We want to recognize these efforts and draw attention to those specific types of advocacy to encourage more exploration of them, so we decided to add 5 standout charities to our list. This brings our current total of standout charities to 9.

We had previously stated on our blog that we intended to expand the number of standout charities. Even so, it was surprising that we gave a standout recommendation to 5 of the 6 charities we conducted a medium review on during this round of recommendations. In some ways this makes sense: we select groups for a medium review because we want to learn more about their work, and because we think they have a higher chance of being recommended than other charities on our list. However, the high percentage of medium-reviewed charities that we selected as standouts raised concerns for future rounds of evaluation, both about our overall selection process and about whether naming more groups weakens the overall strength of our recommendations.

This makes us wonder whether we will need to revise our recommendation criteria or selection process in the future, and leads us to an important question: Do we want to cap the number of standout charities? Continuing to increase the number of standout charities has benefits, but it also has drawbacks, such as potentially diluting our recommendations or causing confusion among our followers. We intend to discuss our current number of standouts, and whether to cap the total in this category, in early 2016.

Allison Smith, Director of Research:

Internal disagreement about recommendation decisions

As the ACE staff grows, more people will contribute to the recommendation process. We found that the members working on evaluations this year had several disagreements about which organizations deserved to be recognized as standout or top charities. We were able to consolidate our thinking in a way that enabled us to agree on our official selections, but compared to previous rounds, we came into the conversation about which groups to recommend with much less common ground.

Although this surprised me, in retrospect there are good reasons for it. Our recommendation process has a lot of subjective elements, and as our research team grows and there are more people involved in the recommendation decision process, more perspectives will be represented and there will be more disagreements. Additionally, the groups we evaluated this year differed in more ways than the groups we reviewed last year, so disagreements about which programs should be prioritized and which of our criteria are most important were more likely to result in disagreements about which groups should be recommended.

If we look at disagreement about which groups to recommend as a problem, we might want to solve it by making our recommendation process more objective, so that we will have a process which gives a single answer. However, making our process more objective might just make it more predictable and not necessarily better at recommending the organizations which are doing the best work. Setting up more objective criteria would move more of the subjective judgements to the question of which criteria we should use, but it wouldn’t eliminate them entirely. I am inclined to think our internal disagreement is actually beneficial, because it forces us to consider our choices more carefully. I feel more confident in our current list of top and standout charities than I would feel in any of the lists we came into the meeting with, including my own. If we change our process in response to this experience, I think we should do so to extend the discussion about which groups to recommend, so that we can take more time to respond to each other’s concerns about our favorite organizations and to learn from each other’s perspectives.

Lack of success finding promising groups in China

This year we made a much more thorough effort to find local groups working in China than we had in the past. With the aid of a list compiled by another animal advocate, we briefly considered over 300 Chinese organizations. I was surprised that this didn’t result in a single published review, or even a single group that responded when we contacted them. The drop-off at each stage of the process seemed reasonable, but I hadn’t predicted that the cumulative result would be so extreme.

I think there were two factors at play here: first, that only a small fraction of the groups we looked at were groups we would want to review; second, that we don’t speak or read Chinese, and there are many groups which can’t or don’t provide information in English. In China, as elsewhere, a majority of organizations work on programs we don’t think are especially likely to be cost-effective, and we didn’t try to review these groups. In addition, we didn’t try to review any groups which didn’t have substantial English content on their websites, because we don’t speak or read Chinese. When we tried to review groups, our attempts to contact them were made in English, and it’s possible that even groups with some English language content online were not prepared to receive and respond to email in English, for instance if the English content was put up originally by someone who is no longer actively involved with the group. Ultimately, we contacted only a few groups, and none of them responded to any of our emails.

I am now unsure whether we will be able to productively consider local groups working in China. The language barrier seems to present a more substantial problem there than in India or Europe, where more people speak English. It’s possible that other factors in the structure of local activism in China would also make groups less interested in working with us, but I don’t think we can really understand such things without overcoming the language barrier. I think that to do a good job of finding local groups working in China and considering them for recommendations, we would need to hire a Chinese-speaking member of our research staff, which would be a substantial commitment.

Jacy Reese, Research Associate:

The importance of organization and leadership for charity evaluation

This was my first time participating directly in our recommendation process. Before becoming a staff member, I knew we did not publish any reviews without the approval of the organization and that this would mean some important evidence and reasoning would be left out of the public version. I didn’t have strong views beforehand on how much and what sort of information would be left out, but I have a much better understanding of that now.

Most interestingly, I hadn’t considered that our reviews can include only limited information on organizational and leadership quality, and I was surprised by how important this information is. It could include beliefs such as:

- “This organization has charismatic, well-spoken leadership. We’d be excited for them to appear on television or write articles that lead the animal advocacy movement.”

- “We’re concerned this charity is poorly organized and therefore might have difficulty adapting their strategy to a growing animal advocacy landscape.”

This factor varies considerably between organizations and seems to make a substantial difference in their capabilities. I don’t think our reviews capture that variation, which makes sense because the topic is so sensitive. It seems entirely reasonable for an organization not to want us to publish specific critical statements about the quality of its leadership or internal organization. We can, of course, include positive statements on this subject, although there is a concern that when we don’t share positive statements about an organization, this will be interpreted as a negative view, which won’t always be the case.

One possible consequence of this is that if someone in our audience disagrees with our conclusions about outstanding giving opportunities, they should defer to our reasoning because we have more evidence than we can share with the public. This seems reasonable to me, although I think it’s a fairly limited consideration and wouldn’t apply to all disagreements.

The imprecision of cost effectiveness analyses

We include several disclaimers in each of our reviews noting the sizable uncertainty in our quantitative cost-effectiveness estimates. I came into my position at ACE already very skeptical of this kind of evaluation, so I wouldn’t say the imprecision itself has surprised me, but I have gained a greater appreciation for it, most notably for how uncertain we are about even the estimates in which we’re most confident.

An interesting source of uncertainty is the potential variation in how different organizations come up with analogous figures, such as leaflets distributed or media views of investigations. Some of the figures reported to us by charities this year seemed wildly implausible to me. Even though we chose not to use those figures in our cost-effectiveness calculations, I think similar differences could exist in smaller, less obvious ways.

For this reason, I worry that our cost-effectiveness estimates might partly reflect which charities have the most optimistic internal estimation procedures. This is one of many reasons I feel our quantitative estimates should play a relatively small role in our evaluation process.


About Allison Smith

Allison studied mathematics at Carleton College and Northwestern University before joining ACE to help build its research program in their role as Director of Research from 2015–2018. Most recently, Allison joined ACE's Board of Directors, and is currently training to become a physical therapist assistant.

China – why wasn’t Animals Asia considered?

I’ve supported them for years, and as far as I can tell they’ve done a lot of good, e.g. in working to eliminate bear farms and, more recently, on the humane treatment of dogs. There are any number of smaller organizations on the front lines who desperately need support. So I must say, although I think your recommended charities are fine, I am a bit skeptical of your methodology.

Hi David,

We did run across Animals Asia, but because of their focus on helping companion animals and running sanctuaries, we haven’t considered them in more detail. There are so many groups doing this kind of work that we can’t consider them all, and we don’t think it’s likely to be as effective as advocacy for farmed animals, so it’s not a high priority for us to increase the number of these groups that we have reviewed. Our FAQ has more details on our thinking about these issues: https://animalcharityevaluators.org/about/background/faq/

If you think that Animals Asia is likely to be a good candidate for our recommendations even though most groups doing similar work aren’t, I’d encourage you to request they be considered using our contact form: https://animalcharityevaluators.org/about/contact-us

>diluting our recommendations or causing confusion among our followers

Yeah, this is definitely an issue, IMO.