December 2014 Evaluation Process

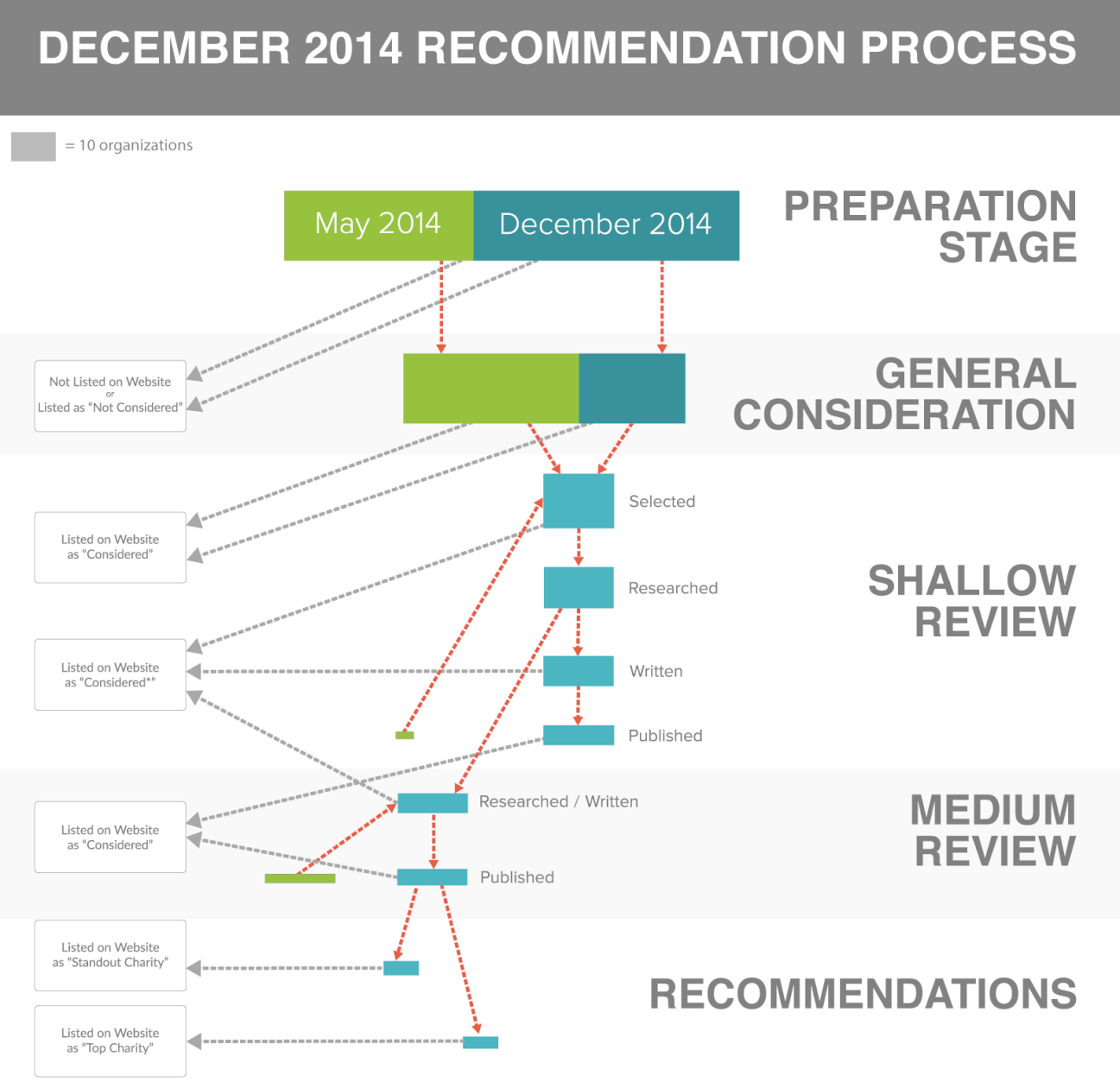

This page discusses the specific process that led to our December 2014 recommendation update; we discuss more general features of the process elsewhere.

Overview

ACE regularly conducts evaluations of charities at a variety of levels of detail; we update our recommendations periodically as a result of these evaluations. Prior to December 2014, our most recent recommendation update took place in May 2014. We began preparing for the December 2014 update immediately after those recommendations were released in mid-May. Our first step was to do some general research to improve our recommendations:

- We finished our intervention evaluation template, allowing us to more thoroughly research specific interventions outside of our reviews of particular organizations.

- We applied our intervention evaluation template to review corporate outreach, an intervention common among organizations we expected to consider seriously for recommendation.

- We researched how countries differ in their animal welfare laws and in public attitudes toward animals, to prepare for evaluating organizations working outside the United States. An intern with a legal background researched the animal welfare laws of the United States and several other key countries, then researched public attitudes toward animals and meat consumption in the same countries, and produced reports for internal use.

The basic consideration phase of our review cycle ran from mid-May through July. It took longer than it might in future rounds because this was the first time we considered groups working outside the United States.

We conducted shallow reviews starting in July. Some communications regarding shallow reviews extended until November.

We conducted medium reviews starting in August. All medium reviews were completed in late November.

We published our recommendations on December 1st, 2014.

ACE’s Executive Director and Research Manager did most of the work on this round of reviews, with help from interns during the basic consideration round and in transcribing conversations, and from ACE’s Director of Communications in publishing the results.

Basic Consideration

We considered organizations from several sources during this round. Interns reviewed lists of animal activist organizations from sources such as the World Animal Net Directory, adding many new organizations and websites to our internal master list; most of these were based outside the United States. ACE staff and interns attended Taking Action for Animals and the Animal Rights Conference, where some groups asked to be considered and experts we had consulted since the start of the previous round of reviews recommended groups for us to consider. Finally, we revisited organizations that we had considered but not reviewed in the past.

Our internal master list at the end of this process included 252 organizations and websites, 147 more than the 105 organizations and sites we listed internally at the end of the previous review process. We added 59 of these to the 97 previously included on our published list of organizations, bringing the total number on that list to 156 (lower than 252 for reasons discussed below). All 59 of these organizations were added to the “Charities Considered” section on the list, or (based on later review) to our top charities or standout charities lists.

During this round, our internal master list grew much more than our public list of charities considered. We attribute this partly to the fact that this was the first time we considered charities working outside the US. Our method of finding such charities frequently turned up entries that we did not think belonged on our public list, for the following reasons:

- The organization’s website had no English version. We did not have the resources to evaluate such organizations fairly in this round, nor to reliably determine whether these sites actually belonged to animal advocacy groups unless one of us happened to read the language or we trusted a machine translation. We decided it would be fairest and most consistent not to list most such organizations at this time, although we did list some whose websites were in languages ACE staff could comfortably read.

- We determined that the website did not actually belong to an active animal advocacy group, or we could not find a current website for the organization. Because we compiled our master list from other online lists, which are rarely perfectly maintained, some entries referred to organizations that had changed names or websites or had disbanded, and we could no longer find them. Some websites seemed to belong to an individual, or clearly belonged to a specific project of an organization already on our list. We keep such entries on our internal list, so that we know we have already researched them if we come across another reference to them, but we do not add them to our public list.

- The organization’s main program is providing direct care to animals. We currently list some such groups, but we do not expect ever to have a comprehensive list of them, particularly because there are a very large number of local companion animal shelters and humane societies. We can often identify such groups in the United States by name alone without visiting their websites, but we are less proficient at identifying groups operating elsewhere. In this round we generally chose not to add such groups to our published list even if they were on our internal list, especially if they provide direct care to companion animals.

Additionally, because we looked at so many groups and websites and had several reasons for leaving some off the public list, there may be groups we did not add that we would have added if we had had more time to think about how to include or exclude groups consistently and whether each specific group should be listed. Before our next review process, we plan to give this type of decision a more carefully defined structure. The organizations we did add to the public list include all those we flagged for possible further review in this round (see the section on shallow reviews), all those that asked us to consider them, and some that were referred to us.

Shallow Reviews

We set a target of conducting around 30 shallow reviews during this round, including any shallow reviews that were updates to shallow reviews we had conducted for the previous round.

Selecting organizations to review

We compiled a list of possible organizations to review from the organizations considered during the basic consideration round. We flagged groups for possible inclusion in this round if:

- We had previously conducted a shallow review of the organization and thought that review should be updated based on our increased knowledge about animal activism outside the United States.

- The organization focused on farmed animals, with at least some major projects that did not involve caring directly for the animals.

- The organization addressed a variety of groups of animals, and appeared to have a majority of its programming directed towards helping farmed animals.

- The organization addressed a variety of groups of animals, and appeared to be large enough that it might be valuable to analyze only the branch of its activities dealing with farmed animals.

- The organization addressed another animal issue from an unusual and possibly exceptionally effective perspective.

- An expert whose advice we had solicited had recommended that we review the organization.

We did not flag groups that were not clearly still active or that did not seem to have enough information online for us to review them plausibly (including groups with too little online information in languages we could understand). At this point we had 41 organizations on our list; some fit more than one of the descriptions above. Forty were organizations we had not previously reviewed. To meet our target, we removed from the list:

- Four organizations whose websites had neither an English version nor substantial English-language sections.

- Two organizations which appeared to be active locally, not at a national or international level.

- Two organizations with interesting programs not focused specifically on farmed animals, which we thought would be worth investigating but which might require more time or specialized knowledge than we had available.

- Two organizations with programs addressing a variety of animal issues, whose farmed animal programs we considered interesting but whose other activities impressed us less.

- One organization which we decided at this point did not have sufficient information on its website to make a review worthwhile.

At this point, we had 30 organizations on our list for shallow reviews, one of which was an update to a previous review.

Conducting reviews

We conducted reviews for each of these organizations based on their websites and other publicly available information. Although we do not generally contact organizations during the early part of the shallow review process, we consider basic financial information, such as assets, revenues, and expenditures for recent years, crucial to an accurate review. Nonprofits registered in the United States and some other countries submit standardized financial information to the government, and in some countries this information is easily available to the public through the government or through third parties such as Guidestar. However, we could not find this information for every organization, either on their websites or through standardized sources (which did not seem to exist, or did not provide the same information, for all countries). Early in the review process, we contacted the 11 organizations for which we could not find basic financial information and requested it. Of these, 4 replied with enough information for us to proceed, and from then on we treated them like the other groups we were reviewing. The other 7 either never responded or asked not to be evaluated; we marked them as “declined to be reviewed” and did not proceed further with their reviews.

Before writing the shallow reviews, we selected 6 of the shallowly reviewed organizations to proceed to the medium review process (see below). We did not write shallow reviews for these organizations. We wrote a total of 17 shallow reviews, including the update to a previous review, and sent them to the organizations involved for corrections and approval. Ultimately, 11 of these groups allowed us to publish versions of our reviews. Reviews that we altered after communicating with the organization involved still represent our own understanding and opinions, which are not necessarily those of the group reviewed. The other 6 groups either asked not to be reviewed or did not reply to our attempts to contact them; not all of them had seen the text of our review when they made that decision. We marked all of these groups as “declined to be reviewed”.
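The counts in this section pass through several stages, and not every intermediate total is stated outright. The short Python tally below is only a reading aid that rederives those totals from the figures given above; the variable names and category labels are illustrative, not terms from the review process itself.

```python
# Illustrative tally of the December 2014 shallow-review funnel.
# Every count comes from the text; variable names are descriptive labels only.

flagged = 41  # organizations flagged for possible shallow review

# Removals made to reach the target of about 30 shallow reviews
removed_to_meet_target = {
    "no substantial English website": 4,
    "active only locally": 2,
    "non-farmed-animal programs needing more time or expertise": 2,
    "mixed programs, less impressive outside farmed animals": 2,
    "too little information online": 1,
}
on_list = flagged - sum(removed_to_meet_target.values())

# Financial-information step: 11 contacted, 4 replied with enough information
contacted_for_financials = 11
replied_with_financials = 4
declined_at_financials = contacted_for_financials - replied_with_financials
researched = on_list - declined_at_financials

# 6 researched organizations moved on to medium reviews without a written shallow review
moved_to_medium = 6
shallow_written = researched - moved_to_medium

# 11 groups allowed publication; the rest declined or did not reply
published = 11
declined_after_draft = shallow_written - published

# Both groups of non-participants were marked "declined to be reviewed"
total_declined = declined_at_financials + declined_after_draft

print(on_list, researched, shallow_written, declined_after_draft, total_declined)
```

Running it prints `30 23 17 6 13`: 30 organizations on the shortlist, 23 remaining after the financial-information step, 17 written shallow reviews, 6 groups that declined or did not reply after drafts were written, and 13 groups marked “declined to be reviewed” at the shallow stage overall.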

Medium reviews

We set a target of conducting 6 new medium reviews and updating any of the 6 medium reviews from May 2014 that the organizations wanted updated. We made these updates optional because the two rounds were close enough together that some information would certainly be unchanged (for instance, most organizations in each round provided us with their 2013 budgets, as that was the most recent year for which they had complete information). At the same time, we knew some organizations would have recent accomplishments to report, and we had questions for each organization about the programs they had been working on when we spoke to them in the spring.

Selecting organizations to review

We notified the 6 organizations we had conducted medium reviews of in our May 2014 round that they could either have another conversation with us or provide documents to us in order to update their reviews. Each organization decided for itself whether its review would be updated. Four, including both of our top charities from that round, chose to have additional conversations and provide documentation afterwards as needed; one chose only to provide documentation; and one chose not to have its review updated.

To select new charities for medium reviews, we met after researching the shallow reviews but before writing them. Each of us (Allison and Jon) individually composed a private list of organizations for possible review, indicating how strongly we felt each listed group should be reviewed. The two lists overlapped heavily: in total we listed 14 organizations, 9 of which appeared on both lists. The organizations we most wanted to review also overlapped: all of them were on both lists, although in some cases only one of us felt strongly that they should be reviewed.

We discussed every organization on each list and chose 6 organizations to approach about a medium review, plus 3 alternates in case some organizations did not want to participate. We deliberately chose some organizations working in different locations or on different projects than our then-recommended charities, in case this gave us an opportunity to make stronger recommendations than we already had. Five of the six organizations we approached agreed to participate. We had approached the sixth organization about a medium review in the May 2014 round, and they had said they were not ready. They again declined to participate in a medium review this round, so we kept the shallow review of them that we had published in May. We approached the first group on our list of alternates to fill this slot, and they agreed to participate.

Conducting reviews

We conducted the new reviews according to our general process for medium reviews. Updates to existing reviews followed a similar process, except that our conversations focused more closely on specific areas of interest, rather than trying to get an overview of the whole organization’s activities, and in one case we skipped the conversation and worked entirely based on text documentation.

We provided organizations with conversation summaries, reviews, and other documents as we decided what we wanted to publish about each group and what our recommendations would be. Two of the organizations we were reviewing for the first time decided not to allow us to publish any of this material. To preserve these organizations’ privacy, we have obscured some details regarding our decisions about which groups to investigate in the medium review round. They are listed as “declined to be reviewed” on our list of organizations. Four new organizations, and the five organizations whose reviews we updated, did allow us to publish reviews. Summaries of conversations we had with their staff are also available separately.
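The medium-review counts are likewise spread over several paragraphs. A similar sketch, again with illustrative variable names, totals them from the figures given in this section:

```python
# Illustrative tally of the December 2014 medium reviews; all counts come from the text.

# Updates to the six May 2014 medium reviews (each organization chose for itself)
may_reviews = 6
updated_with_conversation = 4
updated_documents_only = 1
updated = updated_with_conversation + updated_documents_only
not_updated = may_reviews - updated

# New medium reviews: 6 approached, 5 agreed, and the first alternate filled the open slot
approached = 6
agreed = 5
alternates_used = approached - agreed
new_reviews = agreed + alternates_used

# Two first-time organizations declined publication of any material
declined_publication = 2
new_published = new_reviews - declined_publication

print(updated, new_reviews, new_published, new_published + updated)
```

Running it prints `5 6 4 9`: 5 updated reviews, 6 new medium reviews conducted, 4 new reviews published, and 9 published medium reviews in this round overall.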

We were surprised that some organizations did not want us to publish any version of our review or of our conversation with them; this had not happened in our May 2014 round of reviews. While we learned a lot from reviewing the organizations that chose not to have their reviews or conversations published, we were disappointed not to be able to share what we learned with our audience. We’re reviewing the way we communicate with groups about what we hope to publish, with the aim of avoiding this situation in the future. We’re also considering what role the likelihood of being able to publish a review should play in our decisions about which groups to review.

Recommendations

After the research for the medium reviews was finished, but before the writing was, we each independently decided, for every charity we had ever given a medium review (whether only in the May round, in both rounds, or only in the current round), whether it should be a top charity, a standout charity, or neither, or whether we were not yet certain. We compared lists and discussed each group. In some cases we were both very certain and agreed with each other; in others we disagreed, or one or both of us were unsure what should happen. After our first conversation on the subject we had no passionate disagreements, but there were some groups we had not yet reached a conclusion on. For these, we continued to think and talk at our weekly meetings about whether to recommend them as top or standout charities until we reached a conclusion. We gathered and processed additional information while writing the reviews, which helped us decide about groups we had been uncertain about at first. We can provide only limited detail about this decision process, since most of it concerned specific aspects of individual organizations. We knew how we planned to categorize each group before we contacted any of them about publishing their reviews.

After this round, we had 3 top charities, including the 2 top charities we had at the start of the round, and 4 standout charities, including the 2 we had at the start of the round. During the round we had decided not to cap the number of standout charities, so this number could continue to grow in future rounds. We wanted the number of top charities to remain small, so that our recommendations provide a clear call to action, and we don’t expect it to grow much in the future.

Additional Information

Updated Recommendations: December 2014

Archive: December 2014 List of Considered Charities

Our Thinking on Upcoming Recommendations

Our Thinking About the Process Leading to Our December 2014 Recommendations

Things that Surprised Us about the December 2014 Review Process